Ethics as PR (or the ‘Some Very Good People Work There!’ Fallacy)

“Ethics in AI”

There’s been a lot of research into “Ethics in AI” recently and it’s being sponsored and led by… *checks notes*… surveillance capitalists like DeepMind.¹

Similarly, lots of people are working on “Ethics in Health” at Philip Morris and “Ethics in Environmentalism” at ExxonMobil.

OK, I made the last two up.

Did you notice?

(I guess it was pretty blatant.)

Because, clearly, that would be ethicswashing.

So how come we accept the first?

If someone told you they were working on environmental ethics at ExxonMobil, they would get laughed out of the room. Yet folks who work on “Ethics in AI” at surveillance capitalists like Google and Facebook are invited to talk to policymakers and sit at the table when crafting privacy and other technology regulation.

Environmental ethics at ExxonMobil

The only way I can make sense of all this is that we don’t see a Google or a Facebook as being as bad as a Philip Morris or an ExxonMobil.

I’ve been trying to explain why they are just as bad for the past seven years, and I don’t know what more I can say.

My goal here isn’t to point fingers at the people who take these positions (although, goodness knows, if you have an alternative, please take it), but at the corporations themselves, which create these positions to begin with as a cynical part of their lobbying and public relations initiatives.

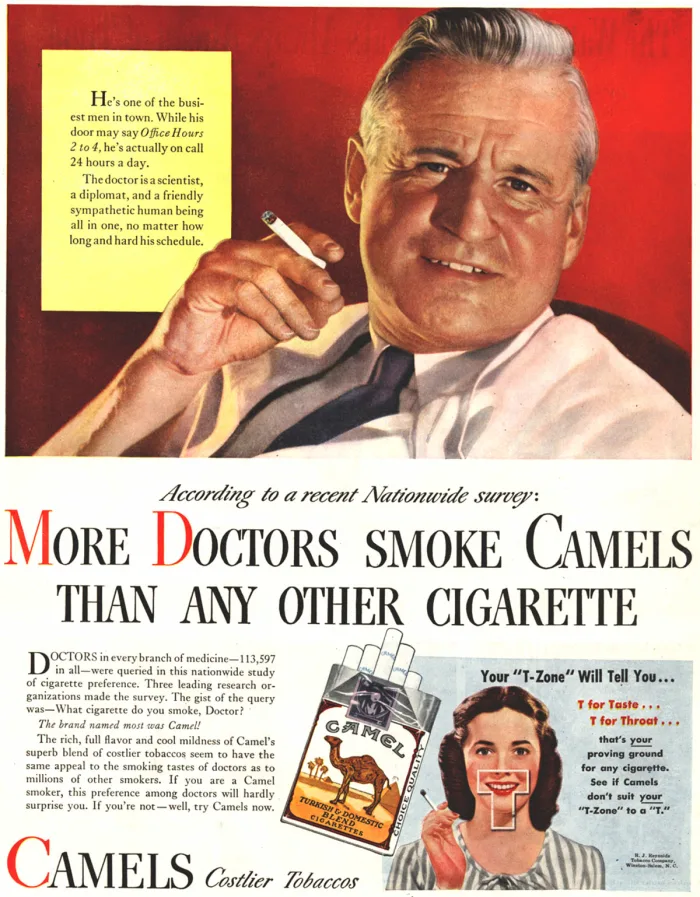

Big Tech is following Big Tobacco’s rulebook to the letter and it seems to be working splendidly so far.

This is why we can’t regulate effectively.

“The truly damning evidence of Big Tobacco’s behavior only came to light after years of litigation. However, the parallels between the public facing history of Big Tobacco’s behavior and the current behavior of Big Tech should be a cause for concern … We believe that it is vital, particularly for universities and other institutions of higher learning, to discuss the appropriateness and the tradeoffs of accepting funding from Big Tech, and what limitations or conditions should be put in place.”

– Mohamed Abdalla (PhD student, University of Toronto Center for Ethics) and Moustafa Abdalla (student, Harvard Medical School) (What Big Tech and Big Tobacco research funding have in common)

Institutional corruption

We are institutionally corrupt.

In addition to the massive influence of lobbying, revolving doors, and sponsorships, we also have corporate capture of academia.

Thanks to this neoliberal success story, university departments today are left at the mercy of industry funding. If the only way an academic in the field can progress is to work with, or secure funding from, a Big Tech company, that is not necessarily the academic’s fault, per se, but a systemic failure.

This is not to say that we should disregard those who make sacrifices to go against the grain, vocally opposing this system and refusing to take part in it. On the contrary, we should be celebrating and supporting them.

“During a panel conversation, Black in AI cofounder Rediet Abebe said she will refuse to take funding from Google, and that more senior faculty in academia need to speak up. Next year, Abebe will become the first Black woman assistant professor ever in the Electrical Engineering and Computer Science (EECS) department at UC Berkeley.

‘Maybe a single person can do a good job separating out funding sources from what they’re doing, but you have to admit that in aggregate there’s going to be an influence. If a bunch of us are taking money from the same source, there’s going to be a communal shift towards work that is serving that funding institution,’ she said.”

– What Big Tech and Big Tobacco research funding have in common (emphasis mine)

When PR backfires

Big Tech is not infallible.

Far from it.

When ethicswashing goes wrong, it can backfire spectacularly and become a PR nightmare, as we saw when the ethicists hired by Google were fired for not reaching the conclusions the corporation wanted them to reach.

This is a relationship that is destined to fail as long as an ethicist does what their job title says instead of what the corporation wants them to do. Because surveillance capitalists like Google are fundamentally unethical.

And while fallout like this can undo the positive PR that Google is trying to cultivate, the presence of respected people in these positions is often all that’s necessary for the corporation to benefit from their legitimacy.

Maybe what we need is Big Tech’s own Surgeon General’s Report moment. But that’s unlikely to happen if the Surgeon General works for Philip Morris.

Greta Thunberg would never work at ExxonMobil

Being a privacy or AI ethicist at a Silicon Valley Big Tech company is the same as being an environmental ethicist at ExxonMobil: your very presence there legitimises the corporation.

There is a reason Greta Thunberg would never work at ExxonMobil.

There is a reason doctors would not work at Philip Morris.

There is a reason pacifists would never join the military.

Because no matter how well-intentioned you are, you are not going to change the fundamental nature of these institutions.

For corporations, their fundamental nature is dictated by their business model. For surveillance capitalists, the business model is based on mass violations of your privacy that are used to profile you with the goal of understanding, predicting and affecting your behaviour for profit. This is a fundamentally unethical business model within a fundamentally unethical socioeconomic system.

These corporations will employ you for as long as they can launder their reputations by using your legitimacy. And when you become too problematic, they will spit you right out again.

The sooner we all realise this, the sooner we tell them “we see what you’re doing”, the sooner no one else has to go through this. Because this isn’t good for anyone involved.

‘Ethics’, as far as a surveillance capitalist is concerned, is public relations.

And I know that’s not what anyone signed up for.

Lots of very good people…

Whenever there’s criticism of a surveillance capitalist like Google or Facebook, someone always says “but I know lots of very good people who work there.”

I don’t doubt it for a minute.

Heck, I know lots of very good people who work there!

In fact, the more wonderful they are, the more legitimacy they add to the unethical corporation they work at. It’s time we at least acknowledged that this is a problem even if we don’t have all the solutions yet.²

Acknowledging the problem is the first step in making these corporations socially unacceptable so we can effectively regulate them and secure funding for alternatives: alternatives not just in technology but also in academia, where these good folks can carry out truly independent research for the common good.

Eric Schmidt once told me “if we ever get too evil, we won’t be able to find anyone to work for us.”

I guess either he was wrong or we just don’t think they’re evil enough yet.

Like this? Fund us!

Small Technology Foundation is a tiny, independent not-for-profit.

We exist in part thanks to patronage by people like you. If you share our vision and want to support our work, please become a patron or donate to us today and help us continue to exist.

¹ Owned by Alphabet, Inc., the same multi-trillion-dollar corporation that owns Google, YouTube, etc.

² And to be clear, I’m not talking about the folks who work in the cafeteria at Google but about the ones with PhDs who sit on policymaking committees and the engineers on six-figure salaries with stock options who keep the machine ticking; these two groups are the ones who might have other options, even within this capitalist hellscape we find ourselves mired in.